The best base class for most tests is django.test.TestCase. This test class creates a clean database before its tests are run, and runs every test function in its own transaction. The class also owns a test Client that you can use to simulate a user interacting with the code at the view level.

Basic example¶

The unittest module provides a rich set of tools for constructing and running tests.
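As a minimal sketch of what such a test looks like, the block below uses plain unittest.TestCase so that it runs without a configured Django project; inside a project you would typically subclass django.test.TestCase instead. The slugify helper, the SlugifyTests class, and run_suite are hypothetical names invented for illustration.

```python
import unittest


def slugify(title):
    """Hypothetical helper under test: lower-case a title and hyphenate it."""
    return "-".join(title.lower().split())


class SlugifyTests(unittest.TestCase):
    def test_lowercases_and_hyphenates(self):
        self.assertEqual(slugify("Hello World"), "hello-world")

    def test_collapses_extra_whitespace(self):
        self.assertEqual(slugify("  Django   Testing "), "django-testing")


def run_suite():
    """Load and run the tests above, returning the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(SlugifyTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Calling run_suite() runs both test methods and returns a result whose wasSuccessful() method reports whether they all passed.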

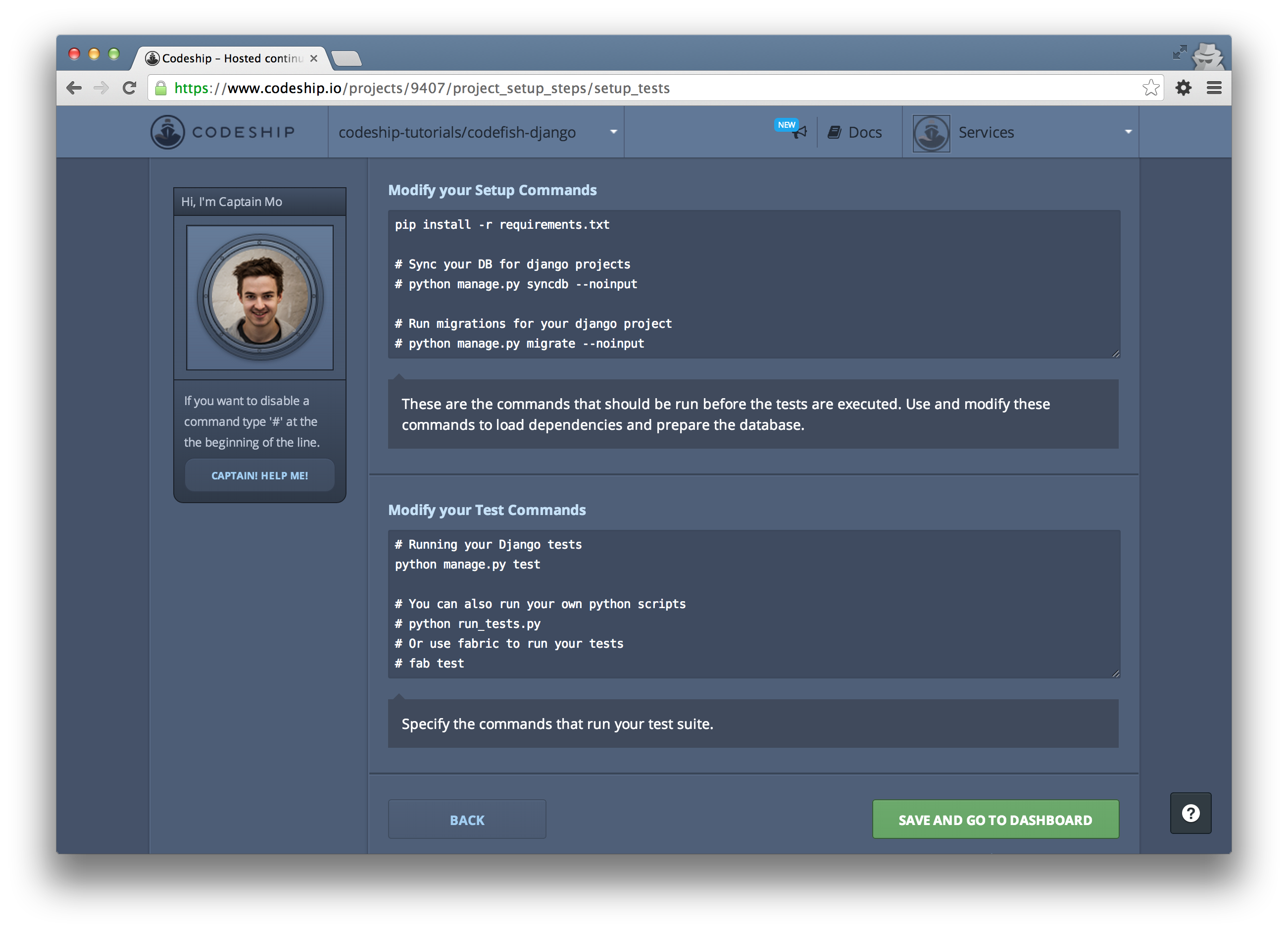

Introduction to Python/Django testing: Basic Unit Tests¶

Last post we talked about how to set up and use doc tests inside of Django. Today, in the second post of the series, we’ll be talking about how to use the other testing framework that comes with Python, unittest. Unittest is an xUnit-type testing system (JUnit from the Java world is another example) implemented in Python.

Select Django from the dropdown and VS Code will populate a new launch.json file with a Django run configuration. The launch.json file contains a number of debugging configurations, each of which is a separate JSON object within the configurations array.

Generally, PyCharm runs and debugs tests in the same way as other applications, by running the run/debug configurations you have created. When doing so, it passes the specified test classes or methods to the test runner.

Running the test suite with pytest offers some features that are not present in Django’s standard test mechanism:

- Less boilerplate: no need to import unittest or create a subclass with methods. Just write tests as regular functions.

- Manage test dependencies with fixtures.

- Run tests in multiple processes for increased speed.
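To illustrate the "regular functions" point, here is a small sketch of pytest-style tests. The apply_discount function is a hypothetical example, not something from a real project. pytest collects functions whose names start with test_ and treats a failing bare assert as a test failure, so no import or base class is needed.

```python
def apply_discount(price, percent):
    """Hypothetical function under test: reduce a price by a percentage."""
    return round(price * (100 - percent) / 100, 2)


def test_apply_discount_reduces_price():
    assert apply_discount(200, 25) == 150


def test_zero_discount_keeps_price():
    assert apply_discount(80, 0) == 80
```

Saved in a file such as test_discount.py (an assumed name), these functions would be picked up by running pytest in that directory.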

In many cases, you can initiate a testing session from a context menu. For this purpose, the Run and Debug commands are provided in certain context menus. For example, these commands are available for a test class, directory, or a package in the Project tool window. They are also available for a test class or method you are currently working on in the editor.

If you run a test for which there is no permanent run/debug configuration, a temporary configuration is created. You can then save such a configuration using the Run/debug configuration dialog if you want to reuse it later.

The tests run in the background, so you can execute several tests at the same time.

Each running configuration gets its own tab in the Run tool window (the Test Results tab). One tab can aggregate several tests.

Note also that the commands shown in the context menu are context-sensitive: the testing command that is shown depends on the test runner and on the place where the command is invoked.

Run or debug a test

To start running or debugging a test, you can use the main toolbar or a context menu in the Project tool window or in the editor:

Use the main toolbar:

Select the necessary run/debug configuration from the list on the main toolbar.

Press Alt+Shift+F10 to see the list of available run configurations or Alt+Shift+F9 for debug configurations.

Click Run or Debug to the right of the list. Alternatively, select Run | Run (Shift+F10) or Run | Debug (Shift+F9) from the main menu.

Use a context menu:

Right-click a test file or test class in the Project tool window, or open it in the editor and right-click the background. From the context menu, select Run <class name> / Run <filename> or Debug...

For a test method, open the class in the editor and right-click anywhere in the method. The context menu suggests the command Run / Debug <method name>.

Run all tests in a directory

In the Project tool window, select the directory that contains tests to be executed.

From the context menu, select the corresponding run command.

If the directory contains tests that belong to different testing frameworks, select the configuration to be used.

For example, select Run pytest in <directory name>.

Explore results in the test runner.

For Django versions 1.1 and later, PyCharm supports a custom test runner, provided that the test runner is a class.

By default, all tests are executed one by one. You can change this behavior for the pytest testing framework and execute your tests in parallel.

Run tests in parallel

Enable test multiprocessing to optimize execution of your pytest tests.

To explicitly specify the number of CPUs for test execution:

Install the pytest-xdist package as described in Install, uninstall, and upgrade packages.

Specify pytest as the project testing framework. See Testing frameworks for more details.

Select Edit Configurations... from the list of the run/debug configurations on the main toolbar. In the Run/Debug Configurations dialog, expand the Pytest tests group, and select pytest in <directory name>.

PyCharm creates this configuration when you run all tests in a directory for the very first time. If you haven't executed the tests yet, click the icon and specify the run/debug Configuration parameters.

In the Run/Debug Configurations dialog, in the Additional Arguments field, specify the number of CPUs to run the tests:

-n <number of CPUs>

and save the changes.

Now run all the tests in the directory again and inspect the output in the Run tool window. In the shown example, the total execution time is 12s 79ms as compared to 30s 13ms when running the same tests sequentially. The test report provides information about the CPUs used to run the tests and the execution time.

Alternatively, you can specify the number of CPUs to run your tests in the pytest.ini file, for example by adding -n <number of CPUs> to the addopts setting in its [pytest] section.

If you specify different numbers of CPUs in the pytest.ini file and in the run/debug configuration, the latter takes precedence over the settings in the pytest.ini file.

You can stop running tests at any time; when you do, all running tests stop immediately. Icons of tests in the Run tool window reflect the status of each test (passed, failed, aborted).

Terminate test execution

In the Run tool window, click the Stop button.

Alternatively, press Ctrl+F2.

Code coverage is a simple tool for checking which lines of your application code are run by your test suite. 100% coverage is a laudable goal, as it means every line is run at least once.

Coverage.py is the Python tool for measuring code coverage. Ned Batchelder has maintained it for an incredible 14 years!

I like adding Coverage.py to my Django projects, like fellow Django Software Foundation member Sasha Romijn.

Let’s look at how we can integrate it with a Django project, and how to get that golden 100% (even if it means ignoring some lines).

Configuring Coverage.py¶

Install coverage with pip install coverage. It includes a C extension for speed-up, so it’s worth checking that this installs properly; see the installation docs for information.

Then set up a configuration file for your project. The default file name is .coveragerc, but since that’s a hidden file I prefer to use the option to store the configuration in setup.cfg.

This INI file was originally used only by setuptools but now many tools have the option to read their configuration from it. For Coverage.py, we put our settings there in sections prefixed with coverage:.

The Run Section¶

This is where we tell Coverage.py what coverage data to gather.

We tell Coverage.py which files to check with the source option. In a typical Django project this is as easy as specifying the current directory (source = .) or the app directory (source = myapp/*). Add it to the [coverage:run] section of the configuration file.

(Remove the coverage: prefix if you’re using .coveragerc.)

An issue I’ve seen on a Django project is Coverage.py finding Python files from a nested node_modules. It seems Python is so great even JavaScript projects have a hard time resisting it! We can tell coverage to ignore these files by adding omit = */node_modules/*.

When you come to a fork in the road, take it.

—Yogi Berra

An extra I like to add is branch coverage. This ensures that your code runs through both the True and False paths of each conditional statement. You can set this up by adding branch = True in your run section.

As an example, take this code:
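A minimal sketch of such code, assuming a hypothetical Widget class and inspect_widget function (both invented here for illustration):

```python
class Widget:
    """Hypothetical stand-in for the widget discussed in the text."""

    def __init__(self, colour):
        self.colour = colour
        self.warnings = []


def inspect_widget(widget):
    # The `if` has two outcomes: the body runs (red) or is skipped (not red).
    if widget.colour == "red":
        widget.warnings.append("red widgets need repainting")
    return widget


# Visits every line, but only the True outcome of the `if`.
red = inspect_widget(Widget("red"))

# Also needed for full branch coverage: the False outcome.
blue = inspect_widget(Widget("blue"))
```

A test that only builds the red widget executes every line of inspect_widget, so line coverage alone would report 100%.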

With branch coverage off, we can get away with tests that pass in a red widget. Really, we should be testing with both red and non-red widgets. Branch coverage enforces this, by counting both paths from the if.

The Report Section¶

This is where we tell Coverage.py how to report the coverage data back to us.

I like to add three settings here.

- fail_under = 100 requires us to reach that sweet 100% goal to pass. If we’re under our target, the report command fails.

- show_missing = True adds a column to the report with a summary of which lines (and branches) the tests missed. This makes it easy to go from a failure to fixing it, rather than using the HTML report.

- skip_covered = True avoids outputting file names with 100% coverage. This makes the report a lot shorter, especially if you have a lot of files and are getting to 100% coverage.

Add them to the [coverage:report] section of the configuration file.
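Putting the run and report settings together, the setup.cfg sections might look like the fragment embedded below. This is a sketch assembled from the options named in the text, not a listing from the original. The fragment sits in a Python string only so that the standard-library configparser can confirm it is well-formed INI; Coverage.py reads the file on disk itself, and the SETUP_CFG name is an assumption.

```python
import configparser

# Assumed setup.cfg contents, built from the options discussed above.
SETUP_CFG = """\
[coverage:run]
source = .
omit = */node_modules/*
branch = True

[coverage:report]
fail_under = 100
show_missing = True
skip_covered = True
"""

parser = configparser.ConfigParser()
parser.read_string(SETUP_CFG)

# Each section becomes a mapping of option name to its string value.
run_options = dict(parser["coverage:run"])
report_options = dict(parser["coverage:report"])
print(run_options)
print(report_options)
```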

(Again, remove the coverage: prefix if you’re using .coveragerc.)

Template Coverage¶

Your Django project probably has a lot of template code. It’s a great idea to test its coverage too. This can help you find blocks or whole template files that the tests didn’t run.

Lucky for us, the primary plugin listed on the Coverage.py plugins page is the Django template plugin.

See the django_coverage_plugin PyPI page for its installation instructions. It just needs a pip install and activation in [coverage:run].

Git Ignore¶

If your project is using Git, you’ll want to ignore the files that Coverage.py generates. GitHub’s default Python .gitignore already ignores Coverage’s files. If your project isn’t using this, add entries for the .coverage data file and the htmlcov folder to your .gitignore.

Using Coverage in Tests¶

This bit depends on how you run your tests. I prefer using pytest with pytest-django. However, many projects use the default Django test runner, so I’ll describe that first.

With Django’s Test Runner¶

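If you’re using manage.py test, you need to change the way you run it. You need to wrap it with three coverage commands: coverage erase to clear old data, coverage run manage.py test to run the suite under measurement, and coverage report to show the results.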

99% - looks like I have a little bit of work to do on my test application!

Having to run three commands sucks. That’s three times as many commands as before!

We could wrap the commands in a shell script that runs them in sequence.

Update (2020-01-06): Previously the below section recommended a custom test management command. However, since this will only be run after some imports, it's not possible to record 100% coverage this way. Thanks to Hervé Le Roy for reporting this.

However, there’s a more integrated way of achieving this inside Django. We can patch manage.py to call Coverage.py’s API to measure when we run the test command. Here’s how, based on the default manage.py in Django 3.0:
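A sketch of such a patched manage.py, reconstructed from the description and the notes that follow rather than copied from the original. The settings module name example.settings and the small get_command helper are assumptions; the Coverage calls used (erase, start, stop, save, report) are part of Coverage.py's public API.

```python
#!/usr/bin/env python
"""Django's command-line utility for administrative tasks."""
import os
import sys


def get_command(argv):
    """Return the management command name, defaulting to "help"."""
    try:
        return argv[1]
    except IndexError:
        return "help"


def main():
    # "example.settings" is a placeholder for your project's settings module.
    os.environ.setdefault("DJANGO_SETTINGS_MODULE", "example.settings")

    running_tests = get_command(sys.argv) == "test"
    if running_tests:
        # Start measuring before Django and the project code are imported.
        from coverage import Coverage

        cov = Coverage()
        cov.erase()
        cov.start()

    try:
        from django.core.management import execute_from_command_line
    except ImportError as exc:
        raise ImportError(
            "Couldn't import Django. Are you sure it's installed and "
            "available on your PYTHONPATH environment variable? Did you "
            "forget to activate a virtual environment?"
        ) from exc
    execute_from_command_line(sys.argv)

    if running_tests:
        cov.stop()
        cov.save()
        # report() prints the table and returns the total percentage covered.
        covered = cov.report()
        if covered < 100:
            sys.exit(1)


# In a real manage.py, finish with the usual guard:
#     if __name__ == "__main__":
#         main()
```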

Notes:

- The two customizations are the blocks before and after the execute_from_command_line block, guarded with if running_tests:.

- You need to add manage.py to omit in the configuration file, since it runs before coverage starts. (It's fine, and good, to put the omit patterns on multiple lines.)

- The .report() method doesn’t exit for us like the command-line method does. Instead we do our own test on the returned covered amount. This means we can remove fail_under from the [coverage:report] section in our configuration file.

Run the tests again and you'll see it in use:

Yay!

(Okay, it’s still 99%. Spoiler: I’m actually not going to fix that in this post because I’m lazy.)

With pytest¶

It’s less work to set up Coverage testing in the magical land of pytest. Simply install the pytest-cov plugin and follow its configuration guide.

The plugin will ignore the [coverage:report] section and source setting in the configuration, in favour of its own pytest arguments. We can set these in our pytest configuration’s addopts setting. For example in our pytest.ini we might have:
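A sketch of what that pytest.ini could contain, mirroring the report settings from earlier with pytest-cov's command-line options (--cov, --cov-report, and --cov-fail-under). The exact values are assumptions. As before, the fragment is held in a Python string only so that it can be parsed and checked with the standard library.

```python
import configparser
import shlex

# Assumed pytest.ini contents; the flags are pytest-cov command-line options.
PYTEST_INI = """\
[pytest]
addopts = --cov=.
          --cov-report term-missing:skip-covered
          --cov-fail-under 100
"""

parser = configparser.ConfigParser()
parser.read_string(PYTEST_INI)

# Indented continuation lines belong to addopts; split them like a shell would.
extra_args = shlex.split(parser["pytest"]["addopts"])
print(extra_args)
```

Here term-missing:skip-covered plays the role of show_missing and skip_covered, and --cov-fail-under replaces fail_under.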

Run pytest again and you’ll see the coverage report at the end of the pytest report:

Hooray!

(Yup, still 99%.)

Browsing the Coverage HTML Report¶

The terminal report is great but it can be hard to join this data back with your code. Looking at uncovered lines requires:

- Remembering the file name and line numbers from the terminal report

- Opening the file in your text editor

- Navigating to those lines

- Repeating this for each set of lines in each file

This gets tiring quickly!

Coverage.py has a very useful feature to automate this merging: the HTML report.

After running coverage run, the coverage data is stored in the .coverage file. Run coverage html to generate an HTML report from this file.

This creates a folder called htmlcov. Open up htmlcov/index.html and you’ll see something like this:

Click on an individual file to see line by line coverage information:

The highlighted red lines are not covered and need work.

Django itself uses this on its Jenkins test server. See the “HTML Coverage Report” on the djangoci.com project django-coverage.

With PyCharm¶

Coverage.py is built in to this editor, in the “Run <name> with coverage” feature.

This is great for individual development but less so for a team, as other developers may not use PyCharm. Also it won’t be automatically run in your tests or your Continuous Integration pipeline.

See more in this JetBrains feature spotlight blog post.

Is 100% (Branch) Coverage Too Much?¶

Some advocate for 100% branch coverage on every project. Others are skeptical, and even believe it to be a waste of time.

For examples of this debate, see this Stack Overflow question and this one.

Like most things, it depends.

First, it depends on your project’s maturity. If you’re writing an MVP and moving fast with few tests, coverage will definitely slow you down. But if your project is supporting anything of value, it’s an investment for quality.

Second, it depends on your tests. If your tests are low quality, Coverage won’t magically improve them. That said, it can be a tool to help you work towards smaller, better targeted tests.

100% coverage certainly does not mean your tests cover all scenarios. Indeed, it’s impossible to cover all scenarios, due to the combinatorial explosion from multiplying branches. (See all-pairs testing for one way of tackling this explosion.)
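The arithmetic behind that explosion can be sketched in a few lines. The example assumes code with independent if statements; count_paths and the other names are made up for illustration.

```python
from itertools import product


def count_paths(num_conditionals):
    """Number of True/False combinations for independent conditionals."""
    return 2 ** num_conditionals


# Every combination of outcomes for three independent conditionals.
all_paths = list(product([True, False], repeat=3))

# Two runs are enough to take both outcomes of each conditional once,
# which satisfies branch coverage while exercising only 2 of the 8 paths.
branch_coverage_runs = [(True, True, True), (False, False, False)]

print(len(all_paths), count_paths(20))
```

Twenty independent conditionals already give over a million paths, while the number of runs needed for full branch coverage stays at two in this idealised case.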

Third, it depends on your code. Certain types of code are harder to test, for example branches dealing with concurrent conditions.

IF YOU’RE HAVING CONCURRENCY PROBLEMS I FEEL BAD FOR YOU SON

99 AIN’T GOT I BUT PROBLEMS CONCURRENCY ONE

—[@quinnypig on Twitter](https://twitter.com/QuinnyPig/status/1110567694837800961)

Some tools, such as unittest.mock, help us reach those hard branches. However, it might be a lot of work to cover them all, taking time away from other means of verification.
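As one illustration, here is a sketch of using unittest.mock to force a rarely taken retry branch. The fetch_with_retry function is a hypothetical example; Mock's side_effect and mock.patch are standard-library features.

```python
import time
from unittest import mock


def fetch_with_retry(fetch, attempts=3, delay=0.5):
    """Call fetch(), retrying on TimeoutError; re-raise after the last attempt."""
    for attempt in range(1, attempts + 1):
        try:
            return fetch()
        except TimeoutError:
            if attempt == attempts:
                raise
            time.sleep(delay)


# Force the retry branch: fail twice, then succeed on the third call.
flaky_fetch = mock.Mock(side_effect=[TimeoutError, TimeoutError, "payload"])

# Patch time.sleep so the example does not actually wait between attempts.
with mock.patch("time.sleep") as fake_sleep:
    result = fetch_with_retry(flaky_fetch)
```

Without the mock, reaching the except branch would depend on a real timeout happening during the test run.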

Fourth, it depends on your other tooling. If you have good code review, quality tests, fast deploys, and detailed monitoring, you already have many defences against bugs. Perhaps 100% coverage won’t add much, but normally these areas are all a bit lacking or not possible. For example, if you’re working a solo project, you don’t have code review, so 100% coverage can be a great boon.

To conclude, I think that coverage is a great addition to any project, but it shouldn’t be the only priority. A pragmatic balance is to set up Coverage for 100% branch coverage, but to be unafraid of adding # pragma: no cover. These comments may be ugly, but at least they mark untested sections intentionally. If no cover code crashes in production, you should be less surprised.
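A small sketch of how such a comment looks in practice. The describe_status function is hypothetical; the comment syntax is Coverage.py's default exclusion marker, which removes the marked line from the report.

```python
def describe_status(code):
    """Hypothetical helper mapping a status code to a short label."""
    if code == 200:
        return "ok"
    if code == 404:
        return "missing"
    # Defensive fallback that the test suite deliberately does not exercise.
    raise ValueError(f"unexpected status {code}")  # pragma: no cover
```

When the marker is placed on a line that opens a block, such as an if or def, Coverage.py excludes the whole block.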

Also, review these comments periodically with a simple search. You might learn more and change your mind about how easy it is to test those sections.

Fin¶

Go forth and cover your tests!

If you used this post to improve your test suite, I’d love to hear your story. Tell me via Twitter or email; contact details are on the front page.

—Adam

Thanks to Aidas Bendoraitis for reviewing this post.

🎉 My book Speed Up Your Django Tests is now up to date for Django 3.2. 🎉

Tags:django

© 2019 All rights reserved.